I recently had the need to provide some telemetry numbers regarding an application that is instrumented with Application Insights. The Azure documentation includes a page that details how to export an analytics query to PowerBI, which is quite helpful. However, the Application Insights service has data only for 90 days, with the recommendation to use continuous export to retain data longer. The continuous export capability will write to Blob Storage, and from there it is our responsibility to handle the data appropriately.

Making this data in Blob Storage available in a PowerBI dataset is possible, and this post will go thru the steps. While the steps are not necessarity complicated, they were unfamiliar to me since I don't use Data Analysis Expressions (DAX) very often. ;)

At a high-level, the steps to get the data:

- Connect PowerBI to the Storage Account and select the Blob container that contains the exported data

- Get the contents of each relevant blob

- Parse the JSON that is the blob contents, extracting relevant properties

- Review data types and relations

The PowerBI desktop application offers both a WYSIWYG and editor experience for creating the DAX required. I will flip back and forth in an attempt to explain what is happening.

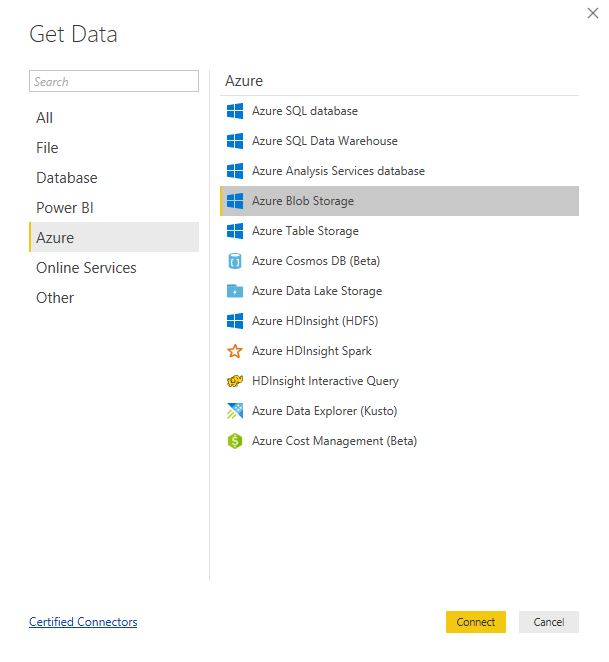

Connect PowerBI to Azure Blob storage

This step is quite straightforward. In PBIDesktop, select Get Data from the ribbon and select Azure Blob Storage from the list.

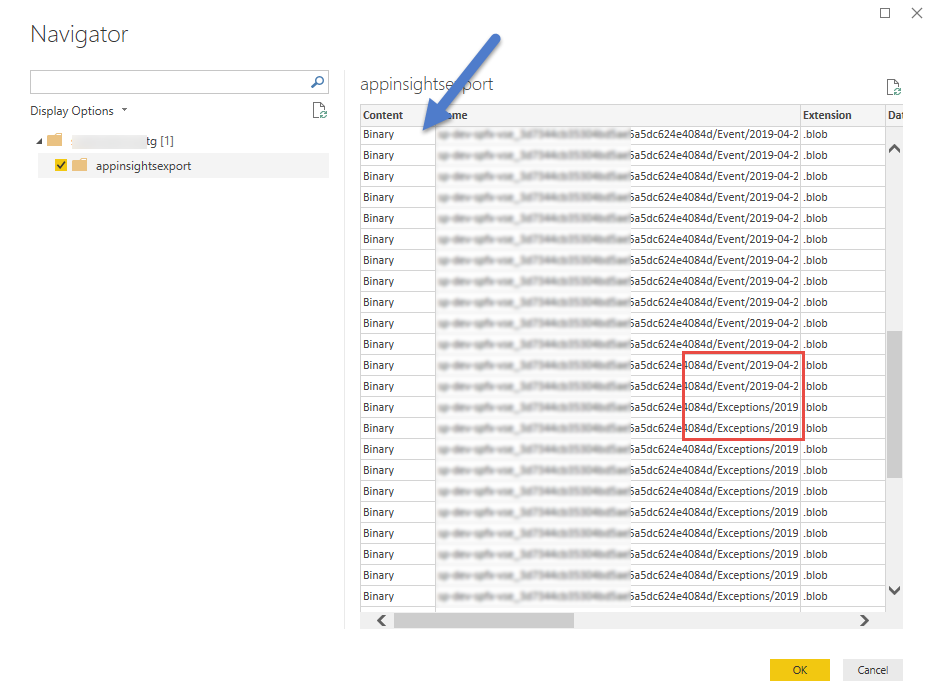

In the resulting Navigator dialog, select the blob container.

Notice a few things in data preview:

- PowerBI sees the content as Binary (blue arrow), so does nothing further with it

- The continuous export process uses slashes in the filename, and uses the telemetry item type as an identifier (red rectangle). In my experience, the JSON payload differs between item types.

After completing the Navigator dialog, the query editor will show the blob list. In the Query Settings pane on the right side, the query name is "appinsightsexport", which was set by PowerBI to match the blob container. The Applied Steps will contain two entries: Source and Navigation. We selected a source, and navigated in that source to the location of the data.

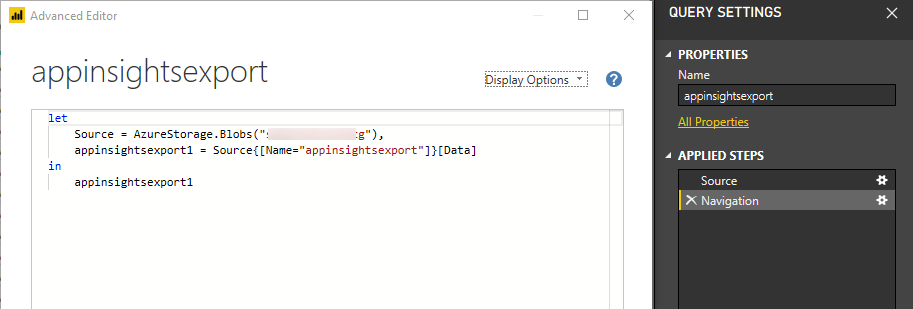

Looking at the Advanced Editor (on the View tab of the ribbon), we can see these two steps represented as DAX expressions.

The DAX expression declares a variable named Source which is a "navigational table". It then assigns the data in the table to a variable named appinsightsexport1. As we progress with shaping the data this is a common pattern. A function is invoked that uses the in-memory table data represented by a variable and the result is assigned to a new variable. These variable names are displayed in the Applied Steps list in the Query Properties pane, so provide meaningful names.

Using the PowerQuery editor, select the chevron to the right of the Name column and select Text Filters > Contains. In this example, we are keeping rows where the Name column contains the value Event. This will exclude other telemetry types (Dependencies, Trace, etc.).

The resulting query source has another line to filter the appinsightsexport1 data:

let

Source = AzureStorage.Blobs("stg"),

appinsightsexport1 = Source{[Name="appinsightsexport"]}[Data],

#"Filtered Rows" = Table.SelectRows(appinsightsexport1, each Text.Contains([Name], "Event"))

in

#"Filtered Rows"

The PowerBI application created a variable containing a space, so the query delimits the variable using the pattern #"Variable with space". When working directly with the query source, I use Pascal-cased variables without spaces. I find the query easier to read using that approach.

Get the contents of each relevant blob

At this point, our dataset is a table of blob names. What we want is all the JSON entries inside those blobs. The PowerQuery editor has the capability to Combine Files. But the wizard assumes the files contain comma-separated values. (The documentation states that the first file is analyzed to determine the type, but that does not work in my experience.) Instead, we will use the advanced editor to extend the query.

let

Source = AzureStorage.Blobs("stg"),

appinsightsexport1 = Source{[Name="appinsightsexport"]}[Data],

FilteredRows = Table.SelectRows(appinsightsexport1, each Text.Contains([Name], "Event")),

ReadContent = Table.AddColumn(FilteredRows, "JsonContent", each Lines.FromBinary([Content],null,null,1252)),

RemovedOtherColumns = Table.SelectColumns(ReadContent, {"JsonContent"})

in

RemovedOtherColumns

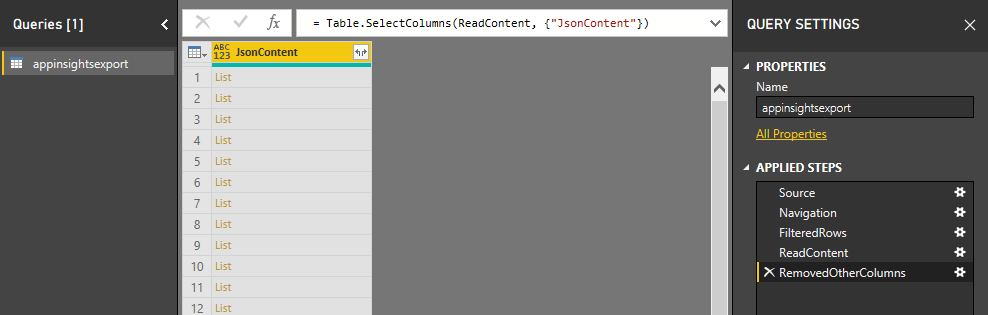

The in-memory table is updated with a new column named "JsonContent". The new column is populated with the expression each Lines.FromBinary([Content],null,null,1252). (For each row in the table, read the binary column named Content and store it in the column named JsonContent.) The in-memory table is then updated to include only the JsonContent column.

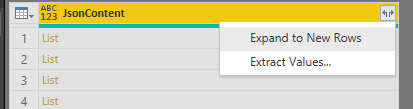

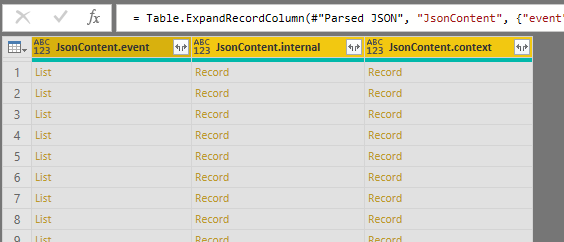

Our JsonContent column is a Structured Column. In the preview, the values are shown as "List" because the JSON content could potentially contain multiple objects. Since we want all of that data, we Expand the column to new rows.

Our query has these steps applied:

let

Source = AzureStorage.Blobs("stg"),

appinsightsexport1 = Source{[Name="appinsightsexport"]}[Data],

FilteredRows = Table.SelectRows(appinsightsexport1, each Text.Contains([Name], "Event")),

ReadContent = Table.AddColumn(FilteredRows, "JsonContent", each Lines.FromBinary([Content],null,null,1252)),

RemovedOtherColumns = Table.SelectColumns(ReadContent, {"JsonContent"}),

ExpandedJsonContentList = Table.ExpandListColumn(RemovedOtherColumns, "JsonContent")

in

ExpandedJsonContentList

At this point, our in-memory table contains a single column of text (which is the JSON content) from all of the blobs that match our filter. We can now parse the JSON.

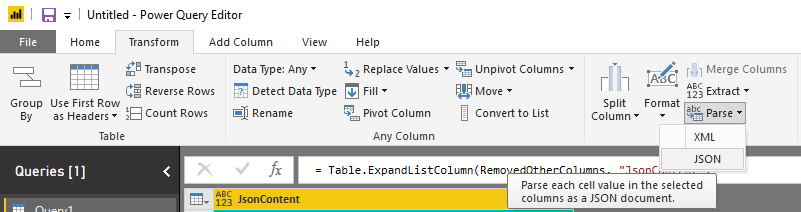

Parse the JSON and extract relevant properties

The query editor has a Parse option on the Transform tab of the Ribbon. This will convert the selected column from a string to a Record. We can then expand the record (object) to show the component properties as columns.

Before going further, we need to understand the shape of the JSON blobs. For the AppInsights events data that I am processing, the files have this basic structure.

{

"event":[],

"internal":{},

"context":{}

}

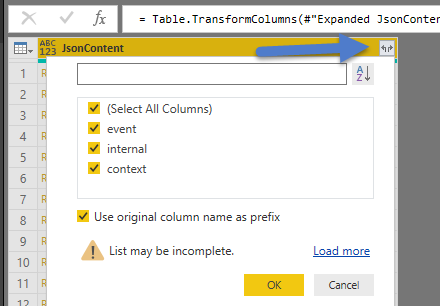

The key point is that the event property is an array, while the others are objects. When PowerBI parses the JSON content, an object is shown as a record while arrays are shown as list.

Understanding the Expand Column behavior

When a List column is expanded, a new row in the table is created for each item in the list. You need to determine if this behavior will skew your results. Another behavior to consider is the naming of the columns. When expanding using the Ribbon, there is a checkbox asking if the expanded columns should be prefixed with the parent column name. For JSON with a deep set of objects, this can be difficult to follow, since you need to expand the width of the column in the preview to see the complete name.

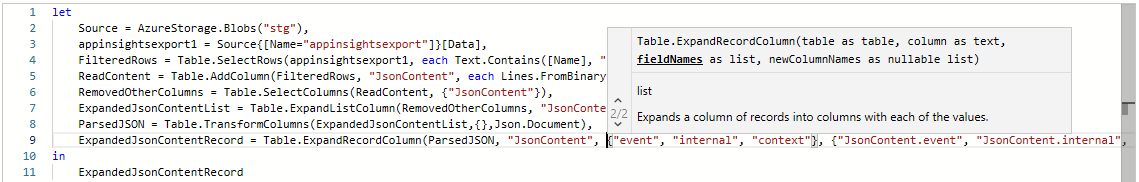

Using the Advanced Query window, we have complete control over the naming of columns, and we can specify which properties to expand. Notice the intellisense popup showing the function parameters.

Extracting relevant properties

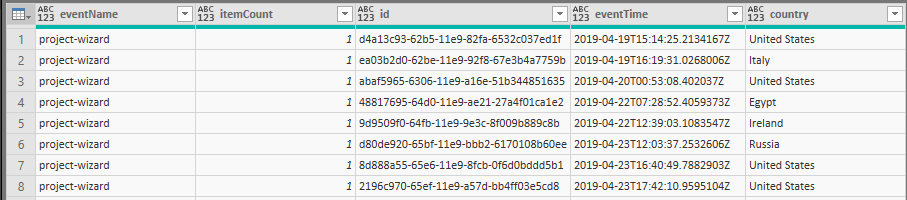

Continuing with the parsing of the JSON object, the properties that my scenario requires are:

- event.name

- event.count

- internal.data.id

- context.data.eventTime

- context.location.country

- context.custom.dimensions

Setting the custom dimensions aside for the moment, the query so far, which includes the expansion of objects:

let

Source = AzureStorage.Blobs("stg"),

appinsightsexport1 = Source{[Name="appinsightsexport"]}[Data],

FilteredRows = Table.SelectRows(appinsightsexport1, each Text.Contains([Name], "Event")),

ReadContent = Table.AddColumn(FilteredRows, "JsonContent", each Lines.FromBinary([Content],null,null,1252)),

RemovedOtherColumns = Table.SelectColumns(ReadContent, {"JsonContent"}),

ExpandedJsonContentList = Table.ExpandListColumn(RemovedOtherColumns, "JsonContent"),

ParsedJSON = Table.TransformColumns(ExpandedJsonContentList,{},Json.Document),

ExpandedJsonContentRecord = Table.ExpandRecordColumn(ParsedJSON, "JsonContent", {"event", "internal", "context"}, {"event", "internal", "context"}),

ExpandedEventList = Table.ExpandListColumn(ExpandedJsonContentRecord, "event"),

ExpandedEventRecord = Table.ExpandRecordColumn(ExpandedEventList, "event", {"name", "count"}, {"eventName", "itemCount"}),

ExpandedInternal = Table.ExpandRecordColumn(ExpandedEventRecord, "internal", {"data"}, {"internal.data"}),

ExpandedInternalData = Table.ExpandRecordColumn(ExpandedInternal, "internal.data", {"id"}, {"id"}),

ExpandedContext = Table.ExpandRecordColumn(ExpandedInternalData, "context", {"data", "location"}, {"context.data", "context.location"}),

ExpandedContextData = Table.ExpandRecordColumn(ExpandedContext, "context.data", {"eventTime"}, {"eventTime"}),

ExpandedContextLocation = Table.ExpandRecordColumn(ExpandedContextData, "context.location", {"country"}, {"country"})

in

ExpandedContextLocation

Our in-memory table now contains rows for each event, with columns for each property.

Custom dimensions

The custom dimensions property is stored as an array of key-value pairs:

{

"context": {

"custom": {

"dimensions": [

{

"componentType": "webpart"

},

{

"framework": "react"

},

{

"environment": "spo"

},

{

"skipInstall": "False"

}

]

}

}

}

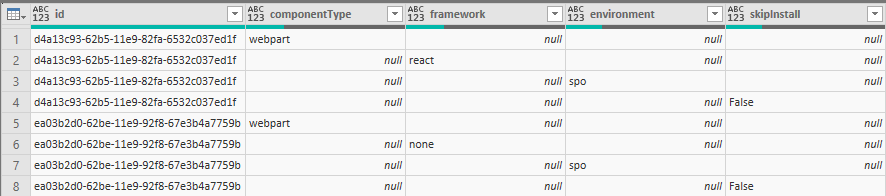

As we have seen, arrays are treated as lists, and when expanded will create new rows for each item in the list. In this example, each event name, id, time, etc. will be repeated for each item in the dimensions array. In effect the table will have 4 records for each event. This will certainly skew the numbers in my scenario!

The solution is to extract the dimensions in to a separate table using a separate query. We can then use the PowerBI capabilities to join the tables.

Using the Query Settings pane on the right, change the Query name to CustomEvents. From the Advanced Query window, copy the query source and save it for later. Then, select Close & Apply in the ribbon to save the CustomEvents query and load the data into PowerBI.

To get the Custom Dimensions, we go thru the Get Data process again. Since we have the query source, we can use the Blank Query template. In the new query, switch to the Advanced Editor and paste the query source. However, in this new query, we will remove most of the ExpandRecordColumn calls. We will keep the first 8 lines, plus the call to expand the "internal.id" property. In addition, we will expand the context.data.custom.dimensions property.

let

Source = AzureStorage.Blobs("spdevspfxvsestg"),

appinsightsexport1 = Source{[Name="appinsightsexport"]}[Data],

FilteredRows = Table.SelectRows(appinsightsexport1, each Text.Contains([Name], "Event")),

ReadContent = Table.AddColumn(FilteredRows, "JsonContent", each Lines.FromBinary([Content],null,null,1252)),

RemovedOtherColumns = Table.SelectColumns(ReadContent, {"JsonContent"}),

ExpandedJsonContentList = Table.ExpandListColumn(RemovedOtherColumns, "JsonContent"),

ParsedJSON = Table.TransformColumns(ExpandedJsonContentList,{},Json.Document),

ExpandedJsonContentRecord = Table.ExpandRecordColumn(ParsedJSON, "JsonContent", {"internal", "context"}, {"internal", "context"}),

ExpandedInternal = Table.ExpandRecordColumn(ExpandedJsonContentRecord, "internal", {"data"}, {"internal.data"}),

ExpandedInternalData = Table.ExpandRecordColumn(ExpandedInternal, "internal.data", {"id"}, {"id"}),

ExpandedContext = Table.ExpandRecordColumn(ExpandedInternalData, "context", {"custom"}, {"custom"}),

ExpandedCustom = Table.ExpandRecordColumn(ExpandedContext, "custom", {"dimensions"}, {"dimensions"}),

ExpandedDimensionsList = Table.ExpandListColumn(ExpandedCustom, "dimensions"),

ExpandedDimensionsRecord = Table.ExpandRecordColumn(ExpandedDimensionsList, "dimensions", {"componentType", "framework", "environment", "skipInstall"}, {"componentType", "framework", "environment", "skipInstall"})

in

ExpandedDimensionsRecord

As expected, we have four rows per Id, with each row containing one of the custom dimensions.

At this point, the standard group by/aggregate functions can get us to one row per id.

GroupedRows = Table.Group(ExpandedDimensionsRecord, {"id"}, {{"componentType", each List.Max([componentType]), type text}, {"framework", each List.Max([framework]), type text}, {"environment", each List.Max([environment]), type text}, {"skipInstall", each List.Max([skipInstall]), type text}})

Save this second query as CustomDimensions. Close & Apply.

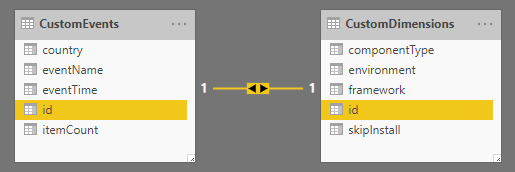

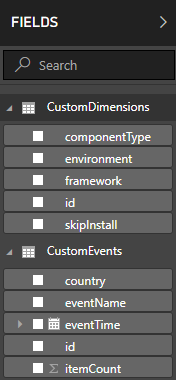

Review data types and relations

Back in the main PowerBI window, select the Model button in the left navigation.

In the model view, the two queries should be joined automatically on the id field. If the relation did not happen automatically, the Model tab in the ribbon has an option to Manage Relationships.

One last item in the data model -- all of the fields from the parsed JSON will be strings. Depending on your scenario, you may need to modify the data types. In my scenarion, I changed the eventTime to datetimezone and itemCount to integer.

ChangedType = Table.TransformColumnTypes(ExpandedContextLocation,{{"eventTime", type datetimezone}, {"itemCount", Int64.Type}})

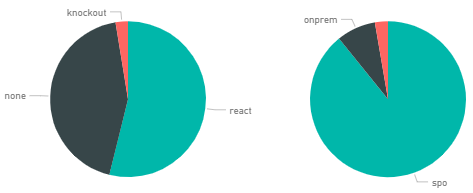

With these changes complete, the data sets are ready to be used in visuals.

Summary

This blog post has covered the steps necessary to data exported from Application Insights in PowerBI. This is an important step in understanding how your applications are being used in production.