I stumbled a bit today when trying to access a blob in Azure Storage: I had made an incorrect assumption about the permissions granted to my organizational account. If you are transitioning your code to use Azure.Identity, this post may be helpful.

In the past, our code would typically access a storage account using a connection string. Rather than storing a connection string, I recommend using a service principal and RBAC. (Read the docs for a discussion of Authorizing access to data in Azure Storage.) The latest SDKs make this approach much easier.
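As a rough illustration of the token-based approach, here is a minimal sketch using the Python counterparts of the .NET packages (`azure-identity` and `azure-storage-blob`). The account, container, and blob names passed in are placeholders, and the endpoint format assumes the public Azure cloud.

```python
def blob_account_url(account_name: str) -> str:
    """Build the Blob endpoint for a storage account (public cloud assumed)."""
    return f"https://{account_name}.blob.core.windows.net"


def read_blob(account_name: str, container: str, blob_name: str) -> bytes:
    """Download a blob with an Azure AD token instead of a connection string."""
    # Imported lazily so the URL helper above works without the Azure packages.
    from azure.identity import DefaultAzureCredential
    from azure.storage.blob import BlobServiceClient

    # No secret in code or config: the credential finds an identity at runtime.
    credential = DefaultAzureCredential()
    service = BlobServiceClient(
        account_url=blob_account_url(account_name),
        credential=credential,
    )
    blob = service.get_blob_client(container=container, blob=blob_name)
    return blob.download_blob().readall()
```

Calling `read_blob("mystorageacct", "docs", "report.txt")` (all hypothetical names) only succeeds if the signed-in identity holds a data-plane role on the account, which is the point of the rest of this post.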

Recommended Approach: Azure.Identity TokenCredential

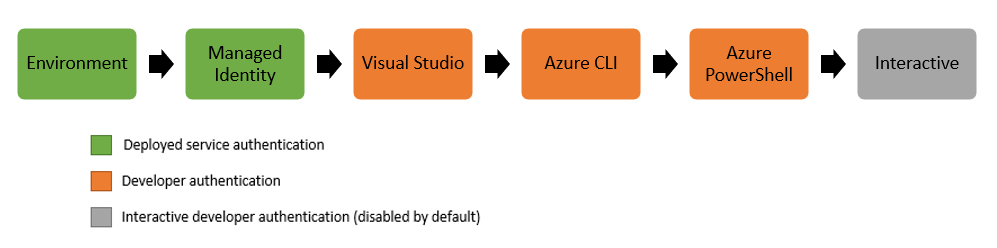

The Azure SDK team has done a good job of describing how the Azure.Identity credential classes can acquire a token for services that support Azure AD authorization. The following paragraph and image are copied from the docs:

DefaultAzureCredential

The DefaultAzureCredential is appropriate for most scenarios where the application is intended to ultimately be run in the Azure Cloud. This is because the DefaultAzureCredential combines credentials commonly used to authenticate when deployed, with credentials used to authenticate in a development environment. The DefaultAzureCredential will attempt to authenticate via the following mechanisms in order.
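The "attempt each mechanism in order" behavior can be pictured with a small conceptual sketch. This is not the SDK's implementation: the source names, their order, and the token values are stand-ins chosen only to show the first-success-wins fallback.

```python
from typing import Callable, Optional, Sequence, Tuple

# Each source is a (name, callable) pair. The callable returns a token string,
# or None when that source is unavailable. All sources here are stand-ins.
TokenSource = Tuple[str, Callable[[], Optional[str]]]


def first_available_token(sources: Sequence[TokenSource]) -> Tuple[str, str]:
    """Return (source_name, token) from the first source that yields a token."""
    tried = []
    for name, get_token in sources:
        token = get_token()
        if token is not None:
            return name, token
        tried.append(name)
    raise RuntimeError("no credential available; tried: " + ", ".join(tried))


# Hypothetical chain: deployment-time sources first, developer tools after.
sources = [
    ("environment", lambda: None),            # no service principal variables set
    ("managed identity", lambda: None),       # not running inside Azure
    ("visual studio", lambda: "dev-token"),   # developer is signed in locally
]
winner = first_available_token(sources)
```

On a developer machine the chain falls through to the locally signed-in account; once deployed, an earlier source answers first and the same code runs unchanged.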

Cannot access a blob

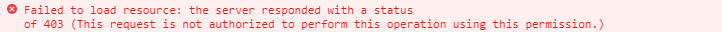

With my code and my Visual Studio account updated, I ran my program. Instead of getting the blob data, I received an error:

This request is not authorized to perform this operation using this permission.

Status: 403 (This request is not authorized to perform this operation using this permission.)

ErrorCode: AuthorizationPermissionMismatch
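If you want your own code to surface a friendlier message for this specific failure, something like the following sketch could work. It assumes the Python `azure-core` exception type `HttpResponseError`; the helper names and the hint text are my own, and only the one error from this post is handled.

```python
from typing import Optional


def explain_storage_error(status_code, error_code) -> Optional[str]:
    """Return a hint for the failure described in this post, else None."""
    if status_code == 403 and error_code == "AuthorizationPermissionMismatch":
        return (
            "The identity authenticated, but it has no data-plane role "
            "(for example Storage Blob Data Reader) on this storage account."
        )
    return None


def read_with_hint(blob_client) -> bytes:
    """Download a blob, re-raising the permission mismatch with a hint."""
    # Imported lazily so the helper above works without the Azure packages.
    from azure.core.exceptions import HttpResponseError

    try:
        return blob_client.download_blob().readall()
    except HttpResponseError as err:
        hint = explain_storage_error(
            err.status_code, getattr(err, "error_code", None)
        )
        if hint:
            raise PermissionError(hint) from err
        raise
```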

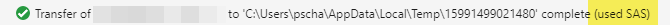

What baffled me was that I could use the Storage Explorer (preview) blade in the portal to view the blob metadata, and I could use the Storage Explorer application to download the blob.

Those tools succeed because they authenticate to Azure Storage differently! In the portal, I was accessing the metadata, not the blob contents. (In Azure-speak, I was accessing the control-plane, not the data-plane.) In the Storage Explorer application, I connected to the storage account by browsing the resources in the subscription, which again goes through the control-plane. I could download the blob because the application uses a SAS token. I just didn't notice it!

In the Azure Portal, clicking the download button failed for the same reason my application did.

Root cause of the permission issue

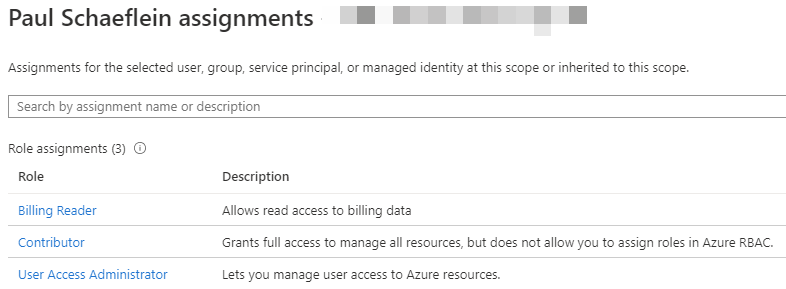

The fix is to assign a role to the account used to access the data. In production, this will be the service principal created by the managed identity for the hosting service. In development, as shown in the image above, that is the account I used in Visual Studio.

In the portal, role assignments are managed on the Access Control (IAM) blade. But wait!! I am already a Contributor on the resource!

The core issue: the Contributor role applies to the control-plane, not the data-plane.

Referring again to the documentation:

Azure built-in roles for blobs and queues

Azure provides the following Azure built-in roles for authorizing access to blob and queue data using Azure AD and OAuth:

- Storage Blob Data Owner: Use to set ownership and manage POSIX access control for Azure Data Lake Storage Gen2. For more information, see Access control in Azure Data Lake Storage Gen2.

- Storage Blob Data Contributor: Use to grant read/write/delete permissions to Blob storage resources.

- Storage Blob Data Reader: Use to grant read-only permissions to Blob storage resources.

- Storage Blob Delegator: Get a user delegation key to use to create a shared access signature that is signed with Azure AD credentials for a container or blob.

Only roles explicitly defined for data access permit a security principal to access blob or queue data. Built-in roles such as Owner, Contributor, and Storage Account Contributor permit a security principal to manage a storage account, but do not provide access to the blob or queue data within that account via Azure AD.

And, continuing that section:

However, if a role includes the Microsoft.Storage/storageAccounts/listKeys/action, then a user to whom that role is assigned can access data in the storage account via Shared Key authorization with the account access keys. For more information, see Use the Azure portal to access blob or queue data.

This last bit is why the Storage Explorer was able to create an SAS token and download the file.
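To make the distinction concrete, here is a small sketch that checks a list of role names against the built-in roles quoted above. It is a simplification: it ignores custom roles and the Shared Key path via `listKeys`, and it leaves out Storage Blob Delegator because that role only issues a user delegation key.

```python
from typing import Iterable

# Role names as quoted from the documentation above.
BLOB_DATA_ROLES = {
    "Storage Blob Data Owner",
    "Storage Blob Data Contributor",
    "Storage Blob Data Reader",
}
MANAGEMENT_ROLES = {"Owner", "Contributor", "Storage Account Contributor"}


def can_read_blobs_with_azure_ad(assigned_roles: Iterable[str]) -> bool:
    """True only if at least one assigned role is a blob data-plane role."""
    return any(role in BLOB_DATA_ROLES for role in assigned_roles)


# My situation before the fix: management roles only.
before_fix = can_read_blobs_with_azure_ad(["Owner", "Contributor"])
# After adding a data-plane role alongside the existing one.
after_fix = can_read_blobs_with_azure_ad(["Contributor", "Storage Blob Data Reader"])
```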

Fixing the issue

Adding my account to the appropriate data-plane role solved the issue. (It took a few minutes for the update in the portal to take effect. Grab a cup of coffee before re-running your operation.)
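I made the assignment in the portal, but it can also be scripted. The sketch below only builds the scope and an Azure CLI command line for `az role assignment create`; it does not run anything. The subscription ID, resource group, account name, and assignee are placeholders.

```python
import shlex


def storage_account_scope(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Build the ARM resource ID used as the scope of the role assignment."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )


def role_assignment_command(assignee: str, role: str, scope: str) -> str:
    """Return a shell-quoted Azure CLI command that grants `role` at `scope`."""
    argv = [
        "az", "role", "assignment", "create",
        "--assignee", assignee,
        "--role", role,
        "--scope", scope,
    ]
    return shlex.join(argv)


# Placeholder values for illustration only.
scope = storage_account_scope(
    "00000000-0000-0000-0000-000000000000", "my-rg", "mystorageacct"
)
command = role_assignment_command("dev@example.com", "Storage Blob Data Reader", scope)
```

Scoping the assignment to the storage account, rather than the whole subscription, keeps the grant as narrow as the task requires.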

A few take-aways:

- From a data security perspective, I fell into a pit-of-success. 👍

- Just because you can see an Azure resource in the portal does not mean you can access its contents using Azure AD. (Control-plane != Data-plane)

- Despite this stumble, transitioning to Azure.Identity + RBAC is pretty straightforward.

- If the service you are calling doesn't support Azure AD access, put the connection string in Key Vault and use Azure.Identity to get it from there.

- Ensure you have logged in to your Azure account in Visual Studio or VS Code.

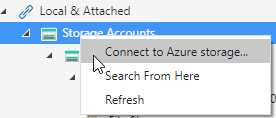

- In Storage Explorer, you can use Azure AD to connect by right-clicking on "Local & Attached / Storage Accounts" and choosing "Connect to Azure Storage...".

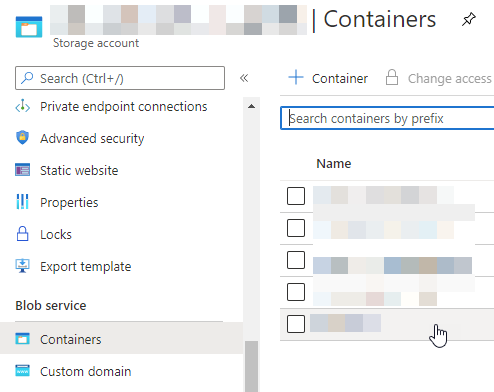

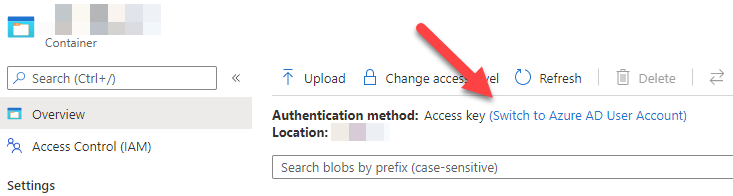

- In the Azure Portal, you can connect to storage using Azure AD by navigating to the container under "Blob service / Containers" and clicking on "Switch to Azure AD User Account".

- Identity is easy!
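For the Key Vault takeaway above, a minimal sketch might look like this. It uses the Python `azure-identity` and `azure-keyvault-secrets` packages; the vault and secret names are placeholders, and the vault URL format assumes the public Azure cloud.

```python
def vault_url(vault_name: str) -> str:
    """Build the Key Vault endpoint (public cloud assumed)."""
    return f"https://{vault_name}.vault.azure.net"


def get_connection_string(vault_name: str, secret_name: str) -> str:
    """Fetch a connection string stored as a Key Vault secret."""
    # Imported lazily so the URL helper above works without the Azure packages.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(
        vault_url=vault_url(vault_name),
        credential=DefaultAzureCredential(),
    )
    return client.get_secret(secret_name).value
```

The same lesson applies here: the identity needs permission to read secrets in the vault itself, not just to see the vault in the portal.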